At one point in the first episode of Westworld, HBO’s new sci-fi Western set in a theme park populated by robots who exist for the perverse entertainment of the park’s wealthy human visitors, the park’s head writer complains to his boss about the latest updates to the “hosts,” as they’re called. “Ford and Bernard keep making the things more lifelike,” he says. “But does anyone truly want that?”

Well, yes and no. For as long as humans have considered the fantastic possibilities of artificial intelligence, we’ve feared the consequences of what might happen if that AI became too intelligent, too human. (Mars enthusiast and Tesla CEO Elon Musk neatly embodies both sides of the coin, developing self-driving cars while tweeting that AI is “potentially more dangerous than nukes.”)

How dangerous Westworld’s robots turn out to be remains to be seen, but it’s already clear that these machines are making their way toward sentience. And the odds seem good that whatever comes from this awakening won’t work out too well for the humans involved. As robot Dolores Abernathy, played by Evan Rachel Wood, puts it, quoting Shakespeare, “These violent delights have violent ends.”

So how realistic is all of this, and when should we start preparing for the robocalypse? We caught up with futurist Martin Ford, author of Rise of the Robots, to discuss this and other questions about our future with robots.

To begin with, what do you think of the technology on the show so far? How realistic does it seem?

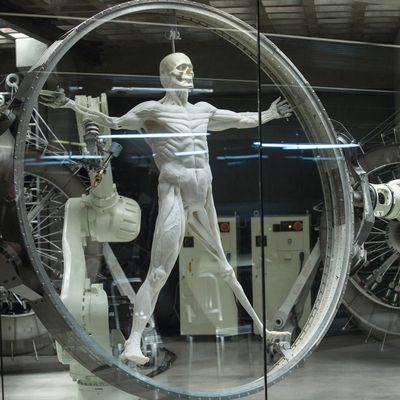

There are two separate technical issues here. One is the artificial intelligence, and the other is building a robot that looks that much like a human being. A robot that you could touch and think is a human is also a giant technological leap. These machines probably have some biological component, which is why they keep them in cold storage; maybe it’s real skin or something. All that stuff is way out there in the future.

One of the themes of the show is that you really can’t tell the machines from people, and I think that’s a situation that we’re going to run into in the not-too-distant future. But it’s not going to be physical robots, it’s going to be virtual interaction, maybe with a chatbot, maybe in the virtual-reality world. You can argue that you see that to some extent already. There’s a chatbot that’s very popular in China that people spend hours and hours talking to, and you’re going to see more and more of that. The line between what’s human and what’s machine is going to blur increasingly.

So would you say we’re closer to developing realistic artificial intelligence than we are to building a robot that looks like one of the robots in the show?

Maybe. But building a truly intelligent machine, a machine that really has the kind of holistic intelligence that a person has, is still an enormous challenge, something that lies far in the future. Some people think that will never happen, but I would guess that if it does happen it’s at a minimum 30 years in the future, maybe more like 50 years.

Do you think if we ever do develop AI with true intelligence, it will inevitably come with the kinds of problems the show seems poised to explore: robots with growing self-awareness and a desire to act in a way that goes against their programming?

You would think that those challenges would very likely come up. It’s very speculative. We’re talking about something that has never been done and may never be done. There is definitely a group of very smart people who are focused on that and, in particular, are very concerned about the potential existential threat from artificial intelligence, if we ever do build machines that can think for themselves and make decisions for themselves.

There’s one scene in the show where Anthony Hopkins is interviewing one of the machines and asks it “What are your drives?” and the robot talks about taking care of his family and so on. That’s one of the things that people who worry about the future of artificial intelligence have focused on: that if you have an artificial intelligence with a programmed drive, that drive could have very unpredictable consequences. One of the classic examples is an artificial intelligence that optimizes the production of paper clips. That sounds very simple and narrow, but if you take that to the extreme, the AI might decide to consume all the resources of the earth and destroy humanity in order to produce an unlimited quantity of paper clips. So you have to be very careful about that — if you give a truly intelligent AI a certain drive to make it want to do something, you have to consider all the implications of that.

There are whole books that have been written on this issue of, “How do you control AI if it really reaches that point where it becomes genuinely intelligent?” Most people assume that once you reach the point of building a machine that is as intelligent as a human, it will very rapidly become smarter than any human. People assume there might be an iterative process where the machine might try to work on its own design and make itself even smarter, and that’s what people worry about. So there are a lot of issues there that I think are real, but they are also, at this time, clearly science fiction.

If the technology within Westworld is so advanced, what’s your best guess at what the rest of this society looks like, outside the theme park?

It’s definitely set in a society where amazing things have happened in other realms, too. I would assume that you’ve got amazing advances in medicine and biotechnology. I’m pretty sure the society would have self-driving cars, for example. The issue that I focused on in my book, Rise of the Robots, is nearer-term stuff. I’m focused on the impact of specialized or narrow artificial intelligence. We’re talking about things like self-driving cars and trucks. Robots that make hamburgers. Machines that automate all kinds of work in factories and warehouses. Specialized algorithms that can automate all kinds of white-collar work, like the systems that can crank out news stories. That’s what’s going to unfold in the next 10 to 20 years.

So what does the world look like outside in this show? All that specialized stuff is in place already, because those advances are really a precursor to this much more advanced human-like artificial intelligence. And I believe that it’s a world where there aren’t enough jobs to go around, so if they’ve still got a thriving society — a society that’s not coming apart, with a healthy economy — they’ve probably found some way to adapt to that, like maybe a guaranteed income. Because, to be clear, if you’ve got machines as advanced as what you have in this amusement park, then surely you’ve got technology outside that can do a whole lot of what people are doing for work now.

You do have to wonder why the human characters are so interested in coming to the theme park. Maybe it’s because things are pretty bad outside.

Probably in the future it’s going to be a lot easier and cheaper and more practical to just enter a virtual reality and have these kinds of experiences than actually go to a physical place with physical robots — that would certainly be my guess about how things are really going to progress. So maybe part of what’s going on is that in the world outside the theme park, everyone lives in a virtual world and there’s some subgroup of people who want to leave that and have a real hard experience in the physical realm.

I see the potential for things to go that way. One of the trends we’ve noticed in the U.S. is that there are huge numbers of young men who are not working and not in school, so what are these people doing? A recent study tried to answer that question, and it turns out that what they’re doing is spending enormous amounts of time playing video games. So you can imagine that when video games transform into virtual reality and that becomes more and more real, more and more addictive, that could be a real issue. There are a lot of real implications that haven’t really been thought through yet. Are we going to end up in a world where everyone just plugs into VR and nobody works anymore until the lights go off?

Do you think that AI developing consciousness becomes inevitable at a certain point?

A lot of this technology is being driven by people who are attempting to reverse engineer the human brain. So if, in fact, we’re going to figure out how the brain works and somehow replicate that in a machine, there would be a concern that a lot of human qualities would flow from that. Could you build a machine that would be a sociopath? That would be the worst-case scenario. There’s a lot of debate on that.

Some people argue that a conscious machine, a self-aware machine, is not something that’s possible. I don’t agree with that. It seems to me that the human brain is basically a biological machine, and if we can build that machine in some other medium, silicon or something else, I don’t see why that couldn’t become conscious. I do think we could eventually have machines that are both intelligent, perhaps super-intelligent, and conscious. And at that point things become very unpredictable. It’s hard to see how that will all play out.

Many people view that as part of the process of evolution. Up until this point we’ve had biological evolution, and that’s the tipping point at which evolution is no longer biological — the machines will take over the next step of the process. That’s very frightening, even to me. It might ultimately mean there’s no place for human beings as they existed historically. People like Ray Kurzweil [a fellow futurist and author of The Singularity Is Near, a book that Evan Rachel Wood studied to prepare for her part] say that at that point we merge with the technology, we become something different.

And evolution seems likely to be a big theme in Westworld as well. In the first episode, Anthony Hopkins’s character talks about a mistake in the most recent update on the robots.

When he says mistake, what he means is a mutation, and that’s what drives evolution — random mutations. And in the realm of artificial intelligence, that process could happen much more rapidly than it does for humans. There already are genetic algorithms that basically employ that theory. In computer creativity, for example, there are algorithms that have written original symphonies, there are algorithms that can paint original works of art. People have this bias that machines can’t be creative, that only people can do that — not true. It’s already starting to happen.

And you see that in the show, where you’ve got this ability to improvise. These machines don’t just do a set script: They can improvise, and if they’re improvising, probably what’s going on in their software is something like an evolutionary algorithm, where it’s trying different combinations and evaluating them. That’s the only way they can be creative and try something that’s not preprogrammed.
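For readers curious what “trying different combinations and evaluating them” can look like in practice, here is a minimal sketch of an evolutionary algorithm. It is only an illustration, not anything from the show or from Ford: the function names (`evolve`, `count_ones`), the bit-string candidates, and the toy goal of maximizing the number of 1s are all assumptions chosen to keep the example small.

```python
import random


def evolve(fitness, genome_length, population_size=30, generations=60,
           mutation_rate=0.05, seed=0):
    """Toy evolutionary loop: mutate candidates, score them, keep the best.

    All names and defaults here are illustrative assumptions.
    """
    rng = random.Random(seed)
    # Start from random bit strings; each one is a candidate "combination".
    population = [[rng.randint(0, 1) for _ in range(genome_length)]
                  for _ in range(population_size)]
    for _ in range(generations):
        # Evaluate every candidate and keep the better-scoring half.
        population.sort(key=fitness, reverse=True)
        survivors = population[:population_size // 2]
        # Refill the population with randomly mutated copies of the survivors.
        children = [
            [bit ^ 1 if rng.random() < mutation_rate else bit for bit in parent]
            for parent in survivors
        ]
        population = survivors + children
    return max(population, key=fitness)


def count_ones(genome):
    """Hypothetical fitness function: more 1s means a better candidate."""
    return sum(genome)


best = evolve(count_ones, genome_length=20)
print(count_ones(best), "of 20 bits set")
```

The random bit flips play the role of the “mutations” described above, and the sort-and-keep step is the evaluation. Because the survivors are carried over unchanged, the best score in the population can never get worse from one generation to the next.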

AI awakening is an old science-fiction trope by now — how do you think this show reflects the fears or desires of the current moment?

If you go all the way back to Blade Runner, it’s the same fear. We’ll build these machines that are supposed to be slaves that do exactly what we tell them to, but then they’ll decide they’re beyond that. It’s a genuine concern. I don’t think it’s the immediate concern. The immediate concern is: What happens to all our jobs when the machines that actually do what they’re supposed to do take over? But as we look out further, and perhaps these machines come to have their own objectives, that’s a real concern.

Can you imagine a positive future of advanced robotics and some form of AI?

If we can leverage all this technology on behalf of humanity, it could be very positive. Part of this is being driven by a very powerful competitive dynamic in the commercial arena. Right now it’s Google versus Amazon, and so on — all these hugely profitable, influential companies with enormous resources. That’s what’s really driving AI development now. But all of this does have military and security applications, and we don’t really know where it’s going to emerge and if it can be controlled.

All of this, of course, has been explored so much in science-fiction movies and novels, and for that reason there’s a tendency to dismiss it. I don’t think that’s the correct approach. But it makes it difficult. For example, imagine a politician talking about how we need to prepare ourselves for artificial intelligence; it would be hard for that to be taken seriously. Yet we do need to treat it seriously: What do we do and how do we control all of this? It’s going to be an enormous challenge.

This interview has been edited and condensed.